Hi everyone!

This is Ravi, and today I will tell you about ChatGPT.

Definition of ChatGPT

ChatGPT is an advanced language model developed by OpenAI, designed to understand and generate human-like text. Built on the GPT (Generative Pre-trained Transformer) architecture, ChatGPT can perform a variety of language-related tasks, such as answering questions, generating creative content, providing explanations, and engaging in conversations.

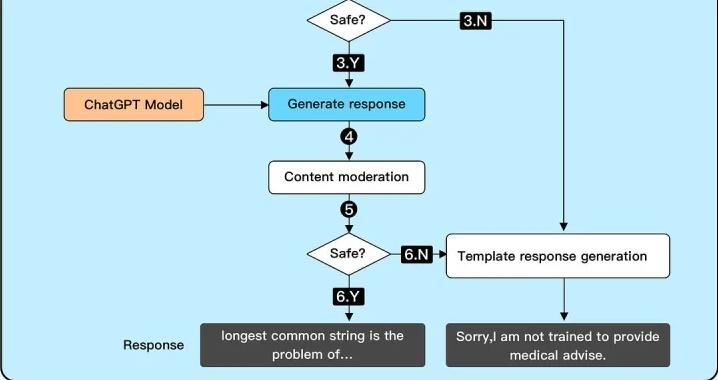

How does ChatGPT work?

ChatGPT operates using a deep learning model known as the Transformer architecture. Here is a detailed explanation of how it works:

Transformer Architecture

- Attention Mechanism: The Transformer architecture’s core component is the attention mechanism, which allows the model to focus on different parts of the input text. This mechanism helps the model understand the context and relationships between words, regardless of their position in the sentence.

- Self-Attention: In self-attention, each word in a sentence considers every other word, including itself, to capture dependencies and context effectively.

- Multi-Head Attention: This involves several attention mechanisms operating in parallel, enabling the model to focus on various aspects of the input text simultaneously, capturing complex relationships.
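To make the three ideas above a bit more concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is only an illustration, not ChatGPT's actual code: the function names, the tiny 2-dimensional embeddings, and the identity projection matrices are all assumptions made for this example. In a real model the projection matrices are learned, and multi-head attention also applies an output projection, which is left out here.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """One attention head. x has one embedding row per token;
    w_q, w_k, w_v are the (normally learned) projection matrices."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    # Every token scores every token, itself included.
    scores = matmul(q, k_t)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    # Each output row is a weighted mix of all value vectors.
    return matmul(weights, v), weights

def multi_head_attention(x, heads):
    """Run several heads and concatenate their outputs per token.
    (The final output projection of a real Transformer is omitted.)"""
    outputs = [self_attention(x, *head)[0] for head in heads]
    return [sum((out[i] for out in outputs), []) for i in range(len(x))]

# Hypothetical toy input: three tokens with made-up 2-d embeddings.
identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
output, weights = self_attention(tokens, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])  # attention paid by one token to all three
```

Each row of `weights` shows how strongly one token attends to every token in the sentence, which is the "focus" the bullets above describe.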

Pre-training and Fine-tuning

- Pre-training: Initially, the model is pre-trained on a vast corpus of text from diverse sources. During this phase, it learns to predict the next word in a sentence, developing a broad understanding of language, grammar, and general knowledge.

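- Fine-tuning: After pre-training, the model is fine-tuned on a smaller, more specific dataset with guidance from human reviewers. For ChatGPT, this includes training on example conversations written by people and reinforcement learning from human feedback (RLHF), in which reviewers rank candidate responses. This process tailors the model to conversational tasks and helps it adhere to usage policies.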
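The idea of "learn to predict the next word, then adjust on a smaller dataset" can be shown with a very loose toy. The sketch below just counts which word follows which; it is nothing like a real Transformer, which learns through gradient updates on billions of tokens. The function names and both tiny corpora are made up for this illustration.

```python
from collections import Counter, defaultdict

def train(corpus, counts=None):
    """Count, for each word, which words follow it.
    Passing existing counts continues training on new text."""
    if counts is None:
        counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequently seen follower of word, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# "Pre-training" on a small, made-up general corpus.
general_corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]
counts = train(general_corpus)
print(predict_next(counts, "the"))  # cat

# "Fine-tuning" on a smaller, more specific (also made-up) dataset.
specific_corpus = [
    "the model answers politely",
    "the model cites sources",
    "the model stays helpful",
]
counts = train(specific_corpus, counts)
print(predict_next(counts, "the"))  # model
```

The second phase reuses what the first phase learned and shifts the predictions toward the new data, which is the basic intuition behind fine-tuning.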

Tokenization

- Text input is split into smaller units called tokens, which can be words or subwords. These tokens are then converted into numerical representations that the model can process.

- The model processes these tokenized inputs through multiple Transformer layers, generating context-aware representations of each token.
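Here is a small sketch of how text can become tokens and then numbers. It uses a simple greedy longest-match rule over a tiny vocabulary that I made up for this example. Real GPT models use a byte-pair encoding tokenizer with a vocabulary of tens of thousands of entries, so treat this only as an illustration of the idea.

```python
def tokenize(text, vocab):
    """Greedily split each word into the longest pieces found in vocab."""
    tokens = []
    for word in text.lower().split():
        start = 0
        while start < len(word):
            # Try the longest remaining piece first, then shrink it.
            for end in range(len(word), start, -1):
                piece = word[start:end]
                if piece in vocab:
                    tokens.append(piece)
                    start = end
                    break
            else:
                tokens.append("<unk>")  # no known piece starts here
                start += 1
    return tokens

def encode(tokens, vocab):
    """Map tokens to the numerical IDs the model would process."""
    return [vocab.get(token, vocab["<unk>"]) for token in tokens]

def decode(ids, vocab):
    """Map IDs back to tokens."""
    id_to_token = {i: token for token, i in vocab.items()}
    return [id_to_token[i] for i in ids]

# Hypothetical toy vocabulary, for illustration only.
VOCAB = {"<unk>": 0, "chat": 1, "gpt": 2, "token": 3, "s": 4,
         "un": 5, "break": 6, "able": 7}

tokens = tokenize("ChatGPT tokens unbreakable", VOCAB)
ids = encode(tokens, VOCAB)
print(tokens)  # ['chat', 'gpt', 'token', 's', 'un', 'break', 'able']
print(ids)     # [1, 2, 3, 4, 5, 6, 7]
```

Notice that "unbreakable" is not in the vocabulary as a whole word, yet it can still be represented by three subword tokens.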

Generation

- Based on the input tokens and their context, the model generates a sequence of tokens as output. This sequence is then converted back into human-readable text.

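- The generation process can be influenced by parameters such as temperature and top-k sampling. A lower temperature makes the output more predictable and a higher one makes it more varied, while top-k sampling restricts each choice to the k most likely tokens.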
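The effect of temperature and top-k can be sketched in a few lines. In the example below, the scores (logits) for the three candidate tokens are invented, and the function names are my own; a real model produces one score for every token in its vocabulary at each step.

```python
import math
import random

def next_token_distribution(logits, temperature=1.0, top_k=None):
    """Turn {token: score} into a list of (token, probability) pairs."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    ranked = sorted(logits.items(), key=lambda item: item[1], reverse=True)
    if top_k is not None:
        ranked = ranked[:top_k]  # keep only the k highest-scoring tokens
    # Dividing by the temperature sharpens (<1) or flattens (>1) the distribution.
    scaled = [score / temperature for _, score in ranked]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [(token, e / total) for (token, _), e in zip(ranked, exps)]

def sample_next_token(logits, temperature=1.0, top_k=None, rng=random):
    """Randomly pick one token according to the adjusted probabilities."""
    distribution = next_token_distribution(logits, temperature, top_k)
    tokens = [token for token, _ in distribution]
    weights = [prob for _, prob in distribution]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Made-up scores for the word after "The pet is a ...".
logits = {"cat": 2.0, "dog": 1.0, "fish": 0.1}

for temperature in (0.5, 1.0, 2.0):
    probs = next_token_distribution(logits, temperature)
    print(temperature, [(token, round(p, 2)) for token, p in probs])

print(sample_next_token(logits, temperature=1.0, top_k=2))  # "cat" or "dog"
```

At a low temperature almost all of the probability goes to "cat", while at a high temperature the three options move closer together. With `top_k=2`, "fish" can never be chosen.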

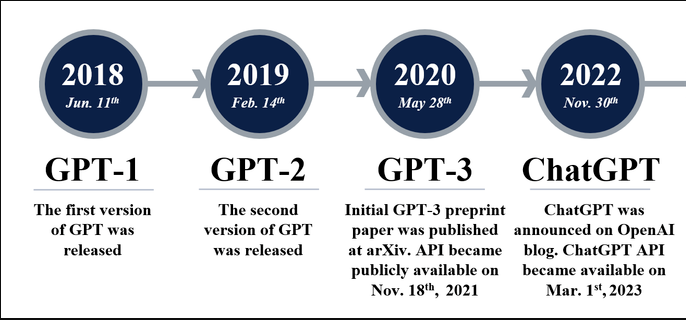

The Evolution of GPT Models

ChatGPT is part of a series of advancements in the GPT models. Here is a brief overview of that evolution:

- GPT (GPT-1)

Released in 2018, GPT-1 was the first model in the series, with 117 million parameters. It showed that pre-training a language model on a large text corpus, followed by fine-tuning on a task-specific dataset, could produce impressive results.

- GPT-2

Released in 2019, GPT-2 significantly increased the scale, with 1.5 billion parameters. It demonstrated the potential of large-scale language models to generate coherent and contextually relevant text. OpenAI initially withheld the full model due to concerns about misuse but later released it in stages.

- GPT-3

Released in 2020, GPT-3 represented a major leap forward with 175 billion parameters. It can perform a wide array of tasks with little or no task-specific fine-tuning, often from just a few examples given in the prompt. It has demonstrated capabilities in generating human-like text, answering questions, and even performing tasks like translation and simple arithmetic.

Conclusion

ChatGPT represents a significant advancement in artificial intelligence and natural language processing. Its foundation on the Transformer architecture and the evolution through various versions of GPT models have enabled it to achieve impressive language understanding and generation capabilities. Through pre-training and fine-tuning, ChatGPT can perform a wide range of language tasks, making it a versatile tool for numerous applications. The ongoing development of GPT models promises even more sophisticated and capable AI systems in the future.

Thanks!